Data Source Configuration

Overview

Data Sources are how GrowthBook connects to your data warehouse so that it can pull those aggregated statistics in order to compute metrics and experiment results. Each Data Source defines how to connect to your data, what version of SQL to use when querying your data, and can provide templates for what the SQL to connect to your Data Source should look like depending upon which event tracker software you use. GrowthBook works with your existing SQL data, no matter where it is located and no matter what shape or format it is in, whether you have a strongly normalized schema, a single “events” table with JSON fields, or something in between.

Supported Event Schemas

When adding a new Data Source in Growthbook from /datasources page we first guide you to select what event tracker software you use, or you can choose custom if you don't use any of the popular third party event trackers that we support. Telling us which event tracker you use, gives us an idea on the likely shape of your data. This will help us generate the correct sql to extract out the aggregated statistics from your site with as little modification on your end as possible. Here is Growthbook's current list of event trackers that we support with links for more details on how to set them up with GrowthBook:

- Amplitude

- CleverTap

- Firebase

- Freshpaint

- Fullstory

- Google Analytics 4 (BigQuery only)

- Heap Analytics

- Jitsu

- Keen IO

- Matomo

- Mixpanel

- MParticle

- RudderStack

- Segment

- Snowplow

If you do not use any of those you choose Custom Data Source and define some of the sql yourself.

Configuration Settings

Once you have chosen your event tracker and data source type and successfully connected, you will be given an opportunity to modify your configuration settings. For many applications Growthbook will have chosen the correct configuration settings straight out of the box based upon which event tracker you choose. In some instances, you may need to tweak them slightly, or in the case of using a custom datasource, define them more explicitly.

Identifier Types

These are all of the types of identifiers you use to split traffic in an experiment and track metric conversions. Common examples are user_id, anonymous_id, device_id, and ip_address.

There are some cases where a single database column isn't enough to uniquely identify a subject. For example, you might need the combination of company and user_id. In this case, we recommend creating a synthetic identifier by concatenating all of the fields together. For example, you can create a company_user identifier and then in your SQL, select it as follows: CONCAT(company, user_id) as company_user.

Experiment Assignment Queries

An experiment assignment query returns which users were part of which experiment, what variation they saw, and when they saw it. Each assignment query is tied to a single identifier type (defined above). You can also have multiple assignment queries if you store that data in different tables, for example, one from your email system and one from your back-end.

Assignment queries are one-half of the queries that are used to generate experiment results, the other being metric queries. Assignment queries can be edited from the Metrics and Data → Data Sources page.

The end result of the query should return data like this:

| user_id | timestamp | experiment_id | variation_id |

|---|---|---|---|

| 123 | 2021-08-23-10:53:04 | my-button-test | 0 |

| 456 | 2021-08-23 10:53:06 | my-button-test | 1 |

The above assumes the identifier type you are using is user_id. If you are using a different identifier, you would use a different column name.

Here's an example query you might use:

SELECT

user_id,

received_at as timestamp,

experiment_id,

variation_id

FROM

events

WHERE

event_type = 'viewed experiment'

Make sure to return the exact column names that GrowthBook is expecting. If your table’s columns use a different name, add an alias in the SELECT list (e.g. SELECT original_column as new_column).

Duplicate Rows

If a user sees an experiment multiple times, you should return multiple rows in your assignment query, one for each time the user was exposed to the experiment.

This helps us detect when users were exposed to more than one variation, and eventually may be useful in helping build interesting time series.

Experiment Dimensions

In addition to the standard 4 columns above, you can also select additional dimension columns. For example, browser or referrer. These extra columns can be used to drill down into experiment results.

Identifier Join Tables

If you have multiple identifier types and want to be able to auto-merge them together during analysis, you also need to define identifier join tables. For example, if your experiment is assigned based on device_id, but the conversion metric only has a user_id column.

These queries are very simple and just need to return columns for each of the identifier types being joined. For example:

SELECT user_id, device_id FROM logins

SQL Templates

GrowthBook uses Handlebars to compile our SQL queries and we expose several variables and helper functions to make your SQL dynamic and reusable. This applies to experiment assignment queries, fact tables, and metrics.

Read more about SQL Templates and the different variables that are available.

Jupyter Notebook Query Runner

This setting is only required if you want to export experiment results as a Jupyter Notebook.

There is no one standard way to store credentials or run SQL queries from Jupyter notebooks, so GrowthBook lets you define your own Python function.

It needs to be called runQuery, accept a single string argument named sql, and return a pandas data frame.

Here's an example for a Postgres (or Redshift) data source:

import os

import psycopg2

import pandas as pd

from sqlalchemy import create_engine, text

# Use environment variables or similar for passwords!

password = os.getenv('POSTGRES_PW')

connStr = f'postgresql+psycopg2://user:{password}@localhost'

dbConnection = create_engine(connStr).connect();

def runQuery(sql):

return pd.read_sql(text(sql), dbConnection)

Note: This python source is stored as plain text in the database. Do not hard-code passwords or sensitive info. Use environment variables (shown above) or another credential store instead.

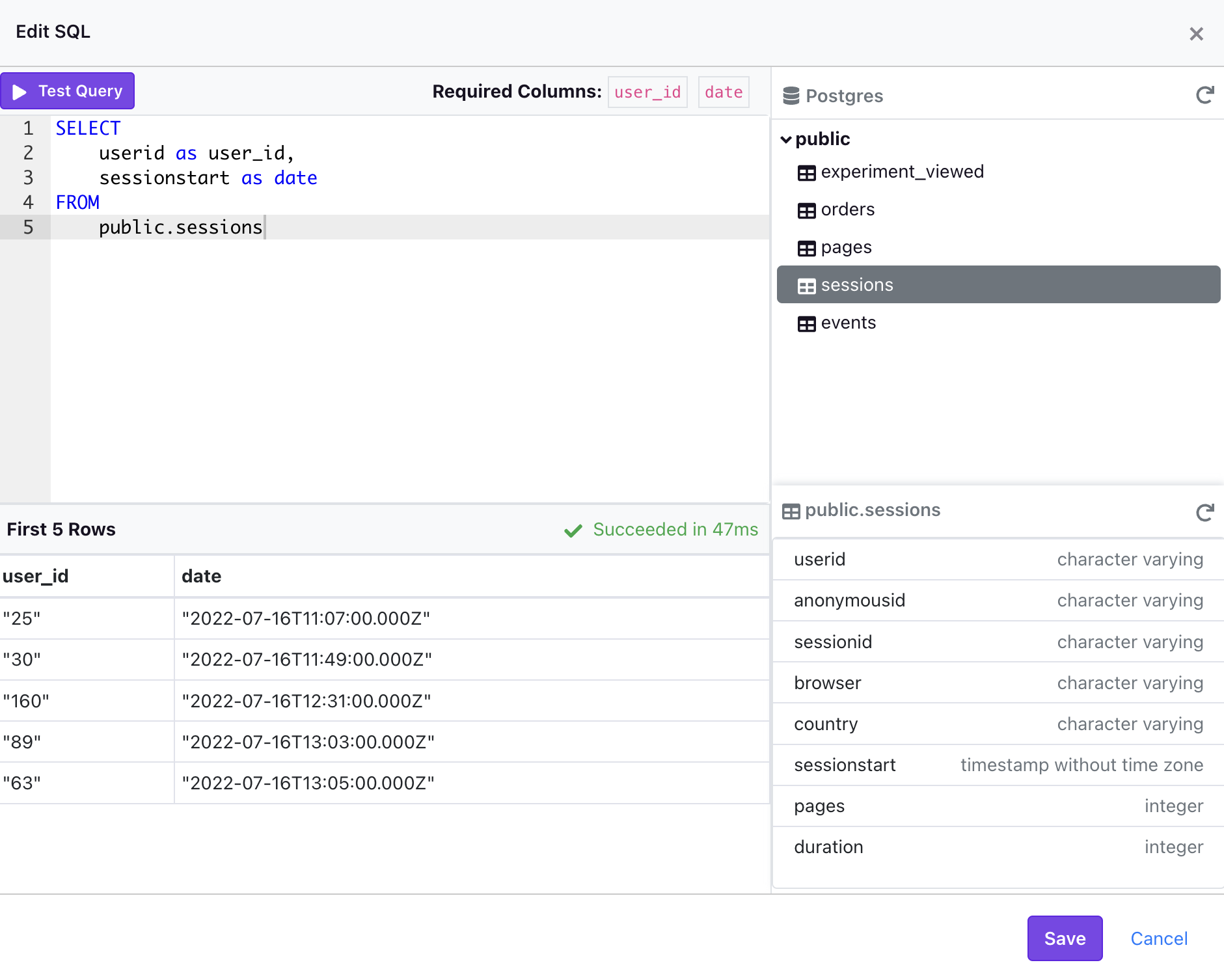

Schema Browser

When you connect a supported data source to GrowthBook, we automatically generate metadata that is used by our Schema Browser. The Schema Browser is a user-friendly interface that makes writing queries easier as you can easily explore information about the datasource such as databases, schemas, tables, columns, and data types.

Below are the data sources that currently support the Schema Browser:

- AWS Athena - Requires a Default Catalog

- BigQuery - Requires a Project Name and Default Dataset

- ClickHouse

- Databricks - Currently only supported on version 10.2 and above with a Unity Catalog

- MsSQL/SQL Server

- MySQL/MariaDB

- Postgres

- PrestoDB (and Trino) - Requires a Default Catalog

- Redshift

- Snowflake

If you added a supported data source prior to GrowthBook v2.0, you can generate the schema manually by clicking "Data Sources" on the left-nav, selecting the data source, and then clicking the "View Schema Browser" button and following the on-screen prompt.